You may wish to compare the effects of different establishment dates, different seasons or different locations on disease levels. This vignette details how you can automate several runs of {epicrop} in R and visualise them.

Fetching NASA POWER data for multiple seasons

Start by defining the dates that represent the seasons you want to simulate. Because we specify the duration of the season, there is no need to state the end date explicitly; it is determined automatically from the duration argument, in the same way as in get_wth(). You can use as many start dates, and therefore as many seasons, as you like, but to keep this vignette quick only two are used here.
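A minimal base-R sketch of the idea, assuming each season simply ends duration days after its emergence date (the package's own convention for counting the first and last day may differ by a day). The variable names mirror those used in this vignette, but the calculation is only an illustration, not the code {epicrop} runs internally.

```r
years <- 2000:2001
emergence_date <- "06-30"  # month-day of emergence in every year
duration <- 120L           # season length in days

# one emergence date per year, e.g. "2000-06-30"
season_start <- as.Date(paste0(years, "-", emergence_date))

# assumed season end: emergence date plus the season length
season_end <- season_start + duration

data.frame(start = season_start, end = season_end)
```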

Use build_epicrop_emergence() to create the emergence dates and fetch_epicrop_weather_list() to fetch the weather data from the NASA POWER API. In this example we use the coordinates of the IRRI Zeigler Experiment Station, as in the example for get_wth().

```r
library(epicrop)

years <- 2000:2001
emergence_date <- "06-30"
duration <- 120L

ymd <- build_epicrop_emergence(years = years, month_day = emergence_date)
```

A helper function, fetch_epicrop_weather_list(), simplifies fetching multiple years of weather data from NASA POWER and takes arguments very similar to those of get_wth(). Because we supply duration, only the starting date of each season is needed, so the argument is start_date rather than dates. The function uses the duration value to fetch all the weather data needed for every year and start date, up to and including the end of the last season for the last start date.
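One reading of that description is that, for each year, the data must run from the earliest start date to the end of the season beginning on the latest start date. The hypothetical helper fetch_window() below, which is not part of {epicrop}, computes such a window in base R under that assumption, and additionally assumes a season ends duration days after it starts.

```r
# Hypothetical helper, not part of {epicrop}: the date range that must be
# fetched in each year so that every season, from every start date, is covered
fetch_window <- function(years, start_date, duration) {
  windows <- lapply(years, function(yr) {
    starts <- as.Date(paste0(yr, "-", start_date))
    data.frame(year = yr,
               from = min(starts),            # earliest emergence
               to = max(starts) + duration)   # end of the last season
  })
  do.call(rbind, windows)
}

fetch_window(years = 2001:2002,
             start_date = c("06-01", "06-14", "06-30"),
             duration = 120L)
```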

```r
wth_list <- fetch_epicrop_weather_list(
  years = years,
  lonlat = c(121.255669, 14.16742),
  start_date = emergence_date,
  duration = duration
)
```

Using a Helper Function to Model Several Seasons

Now that we have weather data for two seasons, we use run_epicrop_model() to run bacterial_blight() for both seasons and combine the results into a single data frame.
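Conceptually, running one model over several seasons and stacking the results is a map-then-bind pattern. The sketch below shows that pattern in base R with a deliberately trivial, hypothetical toy_model() and made-up five-day weather records; it is not how run_epicrop_model() or bacterial_blight() compute AUDPC.

```r
# Hypothetical stand-in for a disease model; NOT an epicrop model.
# It scores a season by counting days with relative humidity above 90 %.
toy_model <- function(wth) {
  sum(wth$RHUM > 90)
}

# Two made-up five-day weather records, one per season (illustrative only)
toy_wth <- list(
  data.frame(RHUM = c(85, 92, 95, 88, 91)),
  data.frame(RHUM = c(80, 93, 70, 75, 60))
)
toy_emergence <- as.Date(c("2000-06-30", "2001-06-30"))

# map: run the model once per season ...
results <- lapply(seq_along(toy_wth), function(i) {
  data.frame(emergence = toy_emergence[i], score = toy_model(toy_wth[[i]]))
})

# ... then bind: stack the per-season rows into one data frame
seasons <- do.call(rbind, results)
seasons
```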

bb_2_seasons <- run_epicrop_model(model = bacterial_blight,

emergence = ymd,

wth_list = wth_list,

window_days = duration)

bb_2_seasons

#> Key: <emergence, AUDPC>

#> emergence AUDPC

#> <epicrop.emerge> <num>

#> 1: 2000-06-30 12.638149

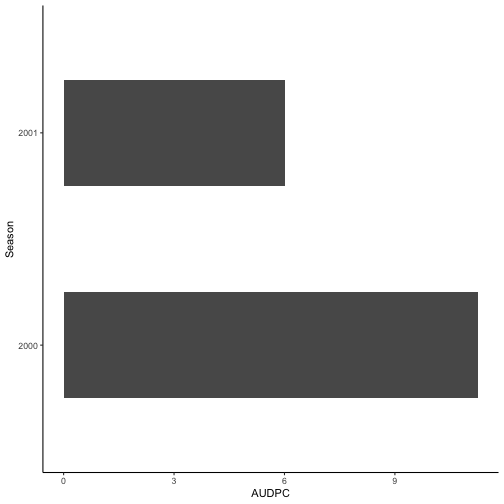

#> 2: 2001-06-30 7.132555Visualising differences in AUDPC between seasons

A simple bar chart created using {ggplot2} is an effective way to visualise the difference between the two seasons.

library(ggplot2)

ggplot(bb_2_seasons,

aes(y = as.factor(emergence),

x = AUDPC)) +

geom_col(width = 0.5,

orientation = "y") +

ylab("Emergence (YYYY-MM-DD)") +

xlab("AUDPC") +

theme_classic()
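The chart makes the gap between the two seasons visible; to put a number on it, the two AUDPC values printed for bb_2_seasons can be compared directly. The values below are copied from that output so that this small sketch runs without fetching any weather data.

```r
# AUDPC values copied from the bb_2_seasons output in this vignette
audpc_2000 <- 12.638149
audpc_2001 <- 7.132555

# absolute difference between the two seasons
audpc_2000 - audpc_2001            # 5.505594

# how many times larger the 2000 AUDPC is than the 2001 AUDPC
round(audpc_2000 / audpc_2001, 2)  # 1.77
```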

Simulating multiple seasons and establishment dates

Single Site with Multiple Dates

The same helper function, run_epicrop_model(), also simplifies simulating multiple establishment dates across many seasons.
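The 60 rows in the output of the example that follows (20 years × 3 dates) suggest that every year is combined with every month-day, ordered by year. A base-R sketch of that expansion for three years is shown here; it is an assumption about what build_epicrop_emergence() does, and the package returns its own epicrop.emerge class rather than a plain Date vector.

```r
demo_years <- 2001:2003
demo_month_day <- c("06-01", "06-14", "06-30")

# expand.grid() varies its first argument fastest, so listing the month-days
# first gives year-major order: all 2001 dates, then 2002, then 2003
grid <- expand.grid(month_day = demo_month_day, year = demo_years,
                    stringsAsFactors = FALSE)
demo_emergence <- as.Date(paste0(grid$year, "-", grid$month_day))
demo_emergence
```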

```r
years <- 2001:2020
month_day <- c("06-01", "06-14", "06-30")
lonlat <- c(121.255669, 14.16742)
duration <- 120L

emergence <- build_epicrop_emergence(years, month_day)

# fetch enough weather data to cover a 120-day season from every
# emergence date in each year
wth_list <- fetch_epicrop_weather_list(years = years,
                                       lonlat = lonlat,
                                       start_date = month_day,
                                       duration = duration)

run_epicrop_model(bacterial_blight,
                  emergence,
                  wth_list,
                  window_days = duration,
                  output = "audpc")
```

```
#> Key: <emergence, AUDPC>
#> emergence AUDPC
#> <epicrop.emerge> <num>
#> 1: 2001-06-01 14.131715
#> 2: 2001-06-14 9.151969
#> 3: 2001-06-30 7.132555
#> 4: 2002-06-01 14.943097
#> 5: 2002-06-14 13.195117
#> 6: 2002-06-30 15.009709
#> 7: 2003-06-01 13.378803
#> 8: 2003-06-14 14.130203
#> 9: 2003-06-30 17.226754
#> 10: 2004-06-01 12.862026
#> 11: 2004-06-14 14.450173
#> 12: 2004-06-30 12.391271
#> 13: 2005-06-01 16.170222
#> 14: 2005-06-14 15.617532
#> 15: 2005-06-30 16.970441
#> 16: 2006-06-01 16.577408
#> 17: 2006-06-14 15.807245
#> 18: 2006-06-30 14.780878
#> 19: 2007-06-01 9.387853
#> 20: 2007-06-14 7.646396
#> 21: 2007-06-30 11.882833
#> 22: 2008-06-01 10.742961
#> 23: 2008-06-14 10.728721
#> 24: 2008-06-30 13.153549
#> 25: 2009-06-01 6.040063
#> 26: 2009-06-14 11.356032
#> 27: 2009-06-30 9.117641
#> 28: 2010-06-01 15.149563
#> 29: 2010-06-14 17.895281
#> 30: 2010-06-30 17.669956
#> 31: 2011-06-01 14.512311
#> 32: 2011-06-14 16.732625
#> 33: 2011-06-30 19.958490
#> 34: 2012-06-01 17.156552
#> 35: 2012-06-14 18.759039
#> 36: 2012-06-30 19.580385
#> 37: 2013-06-01 17.394346
#> 38: 2013-06-14 18.431213
#> 39: 2013-06-30 19.381236
#> 40: 2014-06-01 11.391712
#> 41: 2014-06-14 11.564790
#> 42: 2014-06-30 10.557649
#> 43: 2015-06-01 6.669598
#> 44: 2015-06-14 10.184387
#> 45: 2015-06-30 14.403355
#> 46: 2016-06-01 11.993649
#> 47: 2016-06-14 9.168104
#> 48: 2016-06-30 10.740988
#> 49: 2017-06-01 16.609637
#> 50: 2017-06-14 15.192003
#> 51: 2017-06-30 13.617421
#> 52: 2018-06-01 14.858372
#> 53: 2018-06-14 17.450385
#> 54: 2018-06-30 13.319767
#> 55: 2019-06-01 12.141632
#> 56: 2019-06-14 12.368693
#> 57: 2019-06-30 12.987964
#> 58: 2020-06-01 9.041934
#> 59: 2020-06-14 7.628714
#> 60: 2020-06-30 11.703225
#> emergence AUDPC
#> <epicrop.emerge> <num>
```

Multiple Sites and Seasons

Multiple locations can also be simulated using fetch_epicrop_weather_list() and run_epicrop_model(). Here, Septoria tritici blotch is simulated for three locations, two in Western Australia and one in New South Wales, over two seasons and two planting dates, with a 240-day growing season.
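The output at the end of this section has 12 rows, which is consistent with mode = "cross" pairing every location with every year and start date (3 × 2 × 2). That interpretation is an assumption; the base-R sketch below only enumerates those combinations so you can check how many runs to expect, and does not call {epicrop}.

```r
demo_locs <- c("Merredin", "Corrigin", "Tamworth")
demo_years <- 2020:2021
demo_start <- c("04-15", "05-01")

# every location x year x start-date combination; start dates vary fastest,
# then years, then locations
runs <- expand.grid(start_date = demo_start, year = demo_years,
                    location = demo_locs, stringsAsFactors = FALSE)
runs$emergence <- as.Date(paste0(runs$year, "-", runs$start_date))

nrow(runs)  # 12
runs[, c("emergence", "location")]
```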

```r
# set up the dates
years <- 2020:2021
start_date <- c("04-15", "05-01")

# using a list of multiple locations
locs <- list(
  "Merredin" = c(x = 118.28, y = -31.48),
  "Corrigin" = c(x = 117.87, y = -32.33),
  "Tamworth" = c(x = 150.84, y = -31.07)
)

wth_list <- fetch_epicrop_weather_list(
  lonlat = locs,
  start_date = start_date,
  duration = 240L,
  years = years,
  mode = "cross"
)

emergence <- build_epicrop_emergence(years, start_date)
```

Examples of how to run the model sequentially and in parallel.

```r
run_epicrop_model(s_tritici_blotch,
                  emergence,
                  wth_list,
                  window_days = 240L,
                  output = "audpc")
```

```
#> emergence AUDPC location
#> <epicrop.emerge> <num> <char>
#> 1: 2020-04-15 0.9981608 Merredin
#> 2: 2020-05-01 0.7967816 Merredin
#> 3: 2021-04-15 1.2313931 Merredin
#> 4: 2021-05-01 0.9065767 Merredin
#> 5: 2020-04-15 0.9459766 Corrigin
#> 6: 2020-05-01 0.8105469 Corrigin
#> 7: 2021-04-15 1.0624976 Corrigin
#> 8: 2021-05-01 0.7734221 Corrigin
#> 9: 2020-04-15 0.6112117 Tamworth
#> 10: 2020-05-01 0.6290722 Tamworth
#> 11: 2021-04-15 0.7767261 Tamworth
#> 12: 2021-05-01 0.8202714 Tamworth
```
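To compare locations rather than individual runs, the AUDPC values can be averaged per location. This sketch copies the 12 values from the output above into a base-R data frame; with the real results you could apply the same split-and-average step to the AUDPC and location columns returned by run_epicrop_model().

```r
# AUDPC values and locations copied from the output above
stb <- data.frame(
  location = rep(c("Merredin", "Corrigin", "Tamworth"), each = 4),
  AUDPC = c(0.9981608, 0.7967816, 1.2313931, 0.9065767,
            0.9459766, 0.8105469, 1.0624976, 0.7734221,
            0.6112117, 0.6290722, 0.7767261, 0.8202714)
)

# mean AUDPC over both years and both start dates, per location
mean_audpc <- vapply(split(stb$AUDPC, stb$location), mean, numeric(1))
round(sort(mean_audpc, decreasing = TRUE), 3)
# Merredin 0.983, Corrigin 0.898, Tamworth 0.709
```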